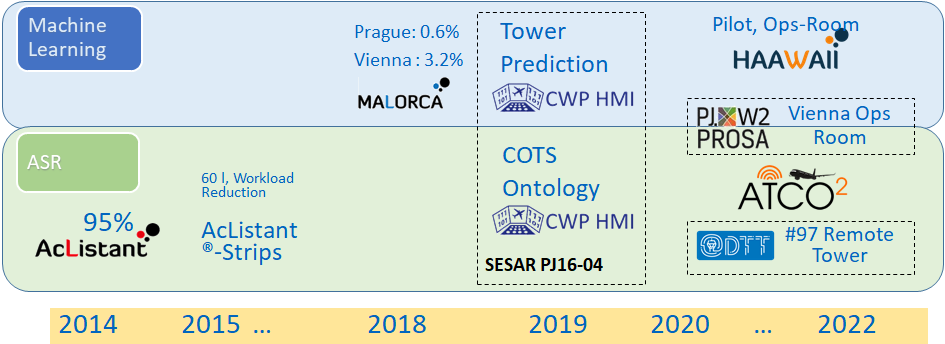

The HAAWAII project addresses both Automatic Speech Recognition for ATM applications and Machine Learning for training the required speech recognition models. The following figure shows the roadmap of Automatic Speech Recognition and Machine Learning.

Building on past research

The AcListant® project, conducted by DLR and Saarland University from 2013 to 2015, showed that recognition performance sufficient to support controllers is possible if assistant-based speech recognition is used. Command recognition rates of 95% were achieved for the Dusseldorf approach area.

The AcListant®-Strips project quantified the benefits of assistant-based speech recognition for the first time. Fuel savings of 60 litres per flight and up to two additional landings per hour were possible.

The MALORCA project (Machine Learning of Recognition Models for Controller Assistance), conducted by DLR, Saarland University, Idiap Research Institute, and the ANSPs of Austria and the Czech Republic, showed that a baseline speech recognizer can be trained by learning from surveillance data and voice recordings. Command recognition error rates below 0.6% and 3.2% were achieved for the Prague and Vienna approach areas, respectively.

The SESAR2020 project CWP HMI showed that command prediction is also possible in the tower environment and that commercial off-the-shelf tools can be connected to assistant-based speech recognition approaches. In this setup, command recognition error rates were acceptable, whereas the command recognition rates of the commercial off-the-shelf engines were only moderate.

Connections to other current projects

In parallel to HAAWAII, other speech recognition projects are being conducted within SESAR and the CleanSky framework; some are also funded by the German Government. The ATCO2 project aims to develop a unique platform for collecting and pre-processing air traffic control (voice communication) data from the airspace. Initially, the project will consider real-time voice communication between air traffic controllers and pilots.

The SESAR projects PROSA and DTT are further projects in this area. PROSA aims to bring speech recognition applications in ATM from Technology Readiness Level (TRL) 4 to 6. DTT demonstrates for the first time that automatic speech recognition is also able to support tower controllers. It aims to achieve TRL 4. Both DTT and PROSA can benefit from the results of HAAWAII. The HAAWAII participants DLR, NATS, Austro Control, and CCL are also partners in PROSA and DTT. Idiap and BUT are also members of the ATCO2 project.

The STARFiSH project takes up the challenge of integrating Artificial Intelligence and Safety-First, using speech recognition as a showcase. The project partners are ATRiCS, DLR, and Fraport AG.