On January 19, 21, and 26, 2021, DLR and BUT offered interactive online training sessions on using the HAAWAII segmentation, labelling, transcription, and data tools.

Sixteen air traffic controllers from the three ANSPs NATS, Isavia, and ACG participated in these sessions.

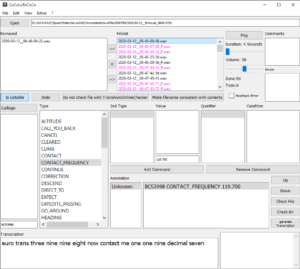

The training covered the tools SpokenData (see screenshot below) and CoCoLoToCoCo (see screenshot below) as well as some basics of data management with FTP (File Transfer Protocol) and SVN (Subversion).

The HAAWAII team is thus ready to (1) automatically segment ATC audio files, (2) manually check this segmentation against the transcription rules (see here), (3) automatically label the audio segments as “controller” or “pilot”, (4) manually check the labelling, (5) automatically perform a first-pass speech-to-text conversion (pre-transcription), and (6) manually check the transcription. In addition, the team can upload and download the data to and from the relevant repository in compliance with all data security requirements. Idiap/BUT carry out the automatic steps, and the ANSPs perform the manual checks. These six steps enable DLR to automatically extract commands from the transcriptions, that is, to annotate them in ontology format (see here), which will be a prerequisite for further speech recognition applications. The plan is to process roughly 20 hours of pure ATC speech data iteratively with this process over the next three months.
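As a rough illustration, the six steps above can be written down as a small data structure in which each automatic step is followed by its manual check. This is only a sketch: the names `Step` and `build_workflow` are hypothetical and do not belong to SpokenData, CoCoLoToCoCo, or any other HAAWAII tool, and the code only models who does what, not the processing itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    number: int   # position in the six-step process
    task: str     # what is done in this step
    mode: str     # "automatic" or "manual"
    owner: str    # party responsible, as stated in the text


def build_workflow():
    """Return the six steps: each automatic task followed by its manual check."""
    # Hypothetical task labels, paraphrased from the description above.
    tasks = ["segmentation", "controller/pilot labelling", "transcription"]
    steps = []
    for i, task in enumerate(tasks):
        steps.append(Step(2 * i + 1, task, "automatic", "Idiap/BUT"))
        steps.append(Step(2 * i + 2, "check of " + task, "manual", "ANSPs"))
    return steps


if __name__ == "__main__":
    for step in build_workflow():
        print(f"({step.number}) {step.mode:9s} {step.task} [{step.owner}]")
```

Running the script lists the steps in order, which makes the alternation between the automatic steps (Idiap/BUT) and the manual checks (ANSPs) explicit.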